Seeing is understanding: Twelve Labs pioneers AI video intelligence on AWS

Sight is the dominant sense in most humans, and it has a profound impact on how we interpret the world around us. What we perceive, how we learn, think, and navigate our surroundings is all heavily mediated by vision. But even those blessed with perfect eyesight are limited by how much information the visual cortex can process at any given moment. Thankfully, technology can go beyond what biology provides.

Twelve Labs is a fast-growing startup using generative AI to process vast amounts of video data, empowering its customers with next-generation video intelligence. The company is training and scaling multimodal AI models on AWS that are capable of interpreting visual data as we do, at a scale made possible by innovative technology and proven expertise.

Putting a big picture idea into motion

Twelve Labs is a South Korean startup focused on AI-powered video intelligence. The company was co-founded by Jae Lee in 2020 and has offices in Seoul and San Francisco. “Twelve Labs is an AI research and product company building video foundation models for enterprises and developers,” says Lee. “Even before we learn how to speak or write, we gather a lot of different aspects of the world through the sensory input data by interacting with it, and we think that that is the better way to build models.”

Twelve Labs was founded at a time when the burgeoning AI market mainly focused on text or images. “When we started the company, people didn't really talk about multimodal or even use the term ‘foundation model’. We were seeing this trend of labs and companies trying to tackle intelligence through understanding language. But then we saw an opportunity—also frankly, a really hard challenge—in tackling video. That’s when we started working on perceptual reasoning.”

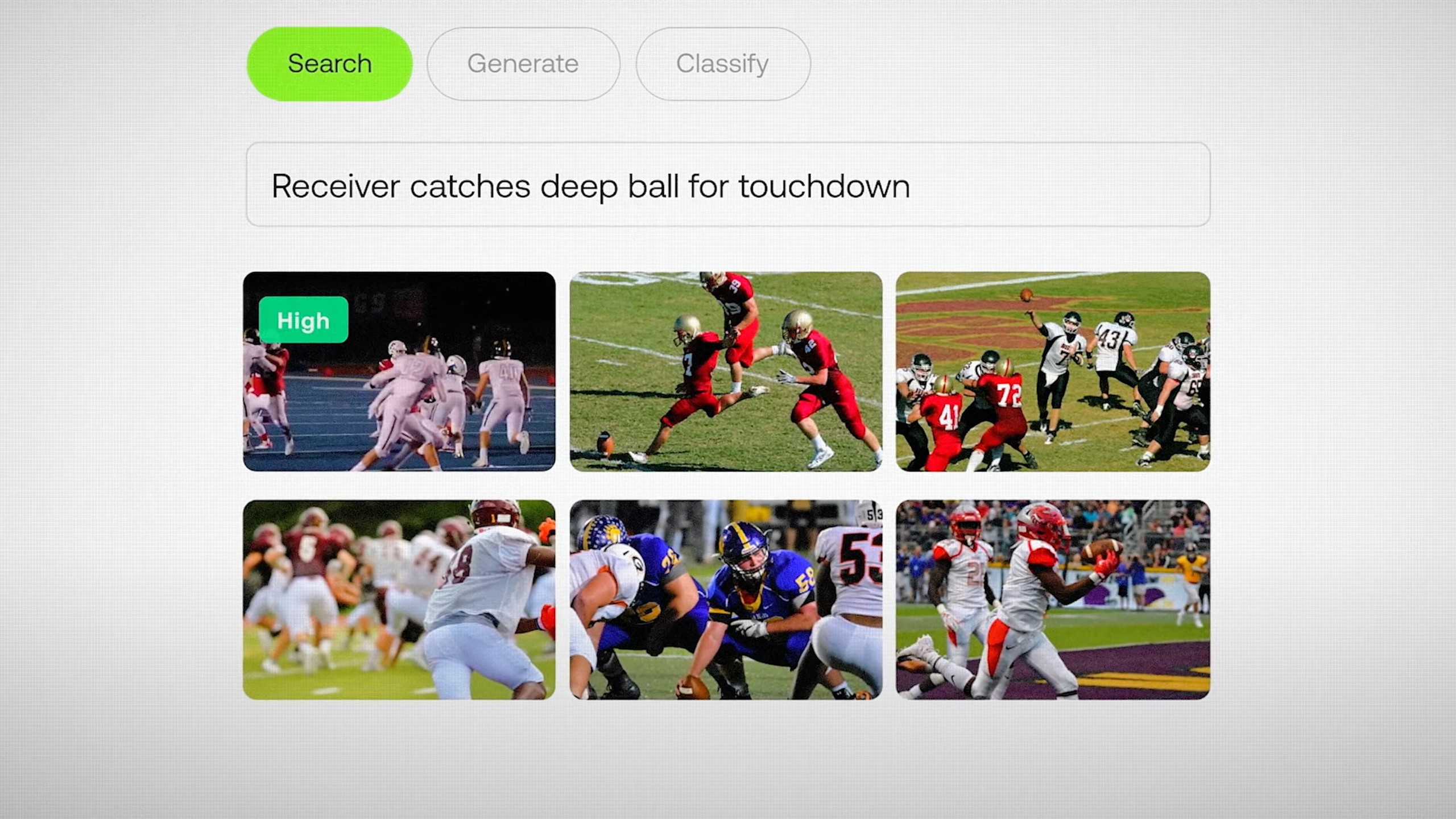

“Our focus area is around traditional video understanding problems like semantic search across large archives, or classification, video chat—even video agents and Retrieval-Augmented Generation (RAG),” says Lee. “If you have a lot of video data and you need to be able to search for things really quickly, Twelve Labs’ API allows you to do that in minutes.” Today, more than 30,000 developers and companies are using Twelve Labs models, including influential brands like the NFL.

Generating insight frame-by-frame

Twelve Labs offers two models, Marengo and Pegasus. “Marengo is dedicated to generating rich multimodal video embeddings that allow you to power any retrieval, meaning images, audio, video, and text,” says Lee. “Pegasus is our video language model, which is able to combine user prompts and the embeddings that Marengo generates to answer user questions, generate reports, and more.”
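To make the idea of embedding-based retrieval concrete, here is a minimal sketch of how a search over video embeddings can work in principle. It is not the Twelve Labs API: the three-dimensional vectors, the clip names, and the `search` helper are all hypothetical stand-ins, and a real system would use embeddings with far more dimensions produced by a model such as Marengo.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def search(query_embedding, index, top_k=2):
    """Rank indexed clips by similarity to the query embedding."""
    scored = [
        (clip_id, cosine_similarity(query_embedding, embedding))
        for clip_id, embedding in index.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy index: clip name -> made-up embedding vector.
index = {
    "touchdown_clip": [0.9, 0.1, 0.0],
    "interview_clip": [0.1, 0.9, 0.1],
    "crowd_clip": [0.6, 0.2, 0.6],
}

# Hypothetical embedding of a text query, in the same vector space.
query = [1.0, 0.0, 0.1]

results = search(query, index)
for clip_id, score in results:
    print(f"{clip_id}: {score:.3f}")
```

Because text, image, audio, and video inputs are mapped into one shared vector space, the same nearest-neighbor lookup can serve any of those query types.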

“We do a lot of novel research around architecture models that are more suited for video. There’s a bunch of text and image embedding models, but video is an entirely different beast. That's the research side of things—but the nitty gritty engineering work still needs to be done,” says Lee.

Thankfully, AI startups are used to tackling significant technical challenges, and Twelve Labs is no different. “Effectively 80 percent of the world’s data is video, which amounts to over 100 zettabytes worth of video content that we first use to train and also index and understand,” says Lee. “The challenge here is the sheer scale.” Twelve Labs is working with AWS to overcome that challenge, drawing on the technology and expertise it needs to reach its goals.

Powering perceptual reasoning with innovative technology

Twelve Labs is using Amazon SageMaker HyperPod to train and scale its models more efficiently. Businesses use SageMaker HyperPod to train foundation models (FMs) for weeks or even months while actively monitoring cluster health and leveraging automated node and job resiliency. If a faulty node is detected, it’s automatically replaced and model training resumes, saving up to 40 percent of training time.
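The general pattern behind resuming a training job after a failure is periodic checkpointing. The sketch below is a toy simulation of that pattern, not the SageMaker HyperPod API: the `NodeFailure` exception, the `train` loop, and the JSON checkpoint file are hypothetical, and the "model state" is a single number standing in for real weights.

```python
import json
import os
import tempfile


class NodeFailure(Exception):
    """Hypothetical stand-in for a hardware or node fault."""


def save_checkpoint(path, step, state):
    """Write the checkpoint to a temp file, then swap it into place."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return (step, state) from the last checkpoint, or a fresh start."""
    if not os.path.exists(path):
        return 0, 0.0
    with open(path) as f:
        data = json.load(f)
    return data["step"], data["state"]


def train(path, total_steps, checkpoint_every, fail_at=None):
    """Run training from the last checkpoint; optionally simulate a fault."""
    step, state = load_checkpoint(path)
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise NodeFailure(f"simulated node failure at step {step}")
        state += 1.0  # placeholder for one real optimization step
        step += 1
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, state)
    return step, state


def run_with_auto_resume(path, total_steps, checkpoint_every, fail_at):
    """Restart training after a failure, resuming from the checkpoint."""
    restarts = 0
    while True:
        try:
            step, state = train(path, total_steps, checkpoint_every, fail_at)
            return step, state, restarts
        except NodeFailure:
            restarts += 1
            fail_at = None  # assume the faulty node has been replaced


with tempfile.TemporaryDirectory() as workdir:
    checkpoint_path = os.path.join(workdir, "checkpoint.json")
    final = run_with_auto_resume(
        checkpoint_path, total_steps=10, checkpoint_every=3, fail_at=7
    )
    print(final)
```

In this toy run the failure at step 7 costs only the work done since the checkpoint at step 6, rather than the whole job, which is the intuition behind the time savings described above.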

“One of the most challenging things about building these models is that we are working with really powerful machines at an incredible scale, from hundreds of GPUs to tens of thousands of CPUs,” says Lee. “Even though these machines are really well built and robust, there are a lot of hardware and node failures.”

“We work closely with the SageMaker HyperPod team. We leverage the resilience and the distributed training infrastructure that AWS has built, which allows us to spin up GPUs, train our models as quickly as possible, and ship them,” says Lee. “The resilience of SageMaker HyperPod, the ability to fix dead nodes and basically outsource high performance computing was really appealing to us.”

The Twelve Labs team also leverages AWS Elemental MediaConvert for cloud-based video transcoding, removing the need to maintain video processing infrastructure. “The AWS Elemental MediaConvert streaming infrastructure allows us to focus on what we're really good at,” says Lee.

Twelve Labs also provides deep integration with Amazon Simple Storage Service (Amazon S3), an object storage service offering industry-leading scalability, data availability, security, and performance. “Our customers really enjoy the seamless integration of Twelve Labs and S3 workflows,” says Lee. “If you store the vast majority of your data on S3, we can seamlessly pull your video data, index, embed, and enable frictionless search.”

Bringing growth into focus

AWS has also helped Twelve Labs power growth through AWS Activate, a flagship program that provides cloud credits, technical support, and business mentorship for startups. The AWS Startups team comprises founders, builders, and visionaries who not only understand the challenges of running startups but have lived them and have the experience to support others throughout their journey. That includes finding the right AWS services for their use case, funding an initial proof-of-concept, and more.

A key part of AWS Activate is helping startups develop go-to-market strategies and increase their exposure to new customers. As part of that process, Twelve Labs joined AWS Marketplace, a curated digital storefront that enables the company to seamlessly deliver its video intelligence services to a global customer base. Companies of all sizes can now use AWS Marketplace to quickly find, try, buy, deploy, and manage Twelve Labs products.

AI that sees the world as we do

Going forward, Twelve Labs will continue to collaborate with AWS and break new ground in AI-powered video intelligence. “The most compelling reason to be excited about working with AWS is the shared empathy for our customers who are dealing with petabytes—or even exabytes—worth of video data,” says Lee.

“AWS has given us the compute power and support to solve the challenges of multimodal AI and make video more accessible, and we look forward to a fruitful collaboration over the coming years as we continue our innovation and expand globally,” says Lee. “We can accelerate our model training, deliver our solution safely to thousands of developers globally, and control compute costs—all while pushing the boundaries of video understanding and creation using generative AI.”

As part of a three-year Strategic Collaboration Agreement (SCA), the company is now working with AWS to further enhance its model training capabilities and deploy its models across new industries like healthcare and manufacturing. “We want to be the visual cortex for all future AI agents—agents that need to see the world the way we do,” says Lee.
